1. Glossary:

- parameter: Any kind of variable that is configurable for an e2e test, e.g.:

  - Fixed or latest version of WordPress

  - Fixed or latest version of any WP plugin (+ any configuration of that plugin)

  - Any configuration (database)

  - Any custom WP configuration that can be done through the WP CLI

  - Any media uploaded

  - Custom post contents (with embeds or any kind of weird content)

- phase: Each parameter belongs to one of 3 phases: build & serve, docker image, or data/plugins. They are explained in detail below.

- workflow: The GitHub Actions workflow that is used to run the e2e tests. It will be defined in a workflow file called something like docker-e2e-wp-test.yml.

- container: A Docker container. In the context of the e2e tests, a new container will be used for each unique combination of parameters.

- WI: A WordPress instance that is used for testing. Each container has its own WordPress instance, which can have any configuration defined by the parameters.

- test case: A single test written in Jest or Cypress syntax and run with the corresponding tool. It is defined with the test() or it() function (optionally grouped inside describe()).

2. There are 3 types of phases

We call them phases because each type is executed at a different time.

- the build and serve phase: we can build the frontity app with different `--publicPath`s or `--target`s.

- the docker image phase: we might need different versions of WordPress or MySQL, and those only come in different Docker images. So we have to be able to tell docker-compose to pull different images from the registry.

- the data/plugins phase: all the remaining configuration that we can do inside the Docker container using the WP CLI: install a plugin, add media, create a post, or even load some data into the DB.
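To make the split concrete, here is a minimal sketch, assuming a test case declares its parameters as one flat object. The field names (`target`, `wpVersion`, `dbDump`, ...) and the `splitByPhase` helper are hypothetical; the point is only that the build & serve part selects a frontity build, while the other two parts select a container.

```javascript
// Hypothetical mapping of parameter names to the phase they belong to.
// None of these names are an existing API; they are illustrative only.
const PHASES = {
  buildServe: ["target", "publicPath"],
  dockerImage: ["wpVersion", "mysqlVersion"],
  dataPlugins: ["dbDump", "mediaFolder", "wpCli"],
};

// Split a flat parameter object into one object per phase,
// keeping only the keys that the test case actually set.
function splitByPhase(params) {
  const result = {};
  for (const [phase, keys] of Object.entries(PHASES)) {
    result[phase] = {};
    for (const key of keys) {
      if (key in params) result[phase][key] = params[key];
    }
  }
  return result;
}

const example = splitByPhase({
  target: "es5",
  wpVersion: "5.1",
  dbDump: "fixtures/head-tags-active/data.sql",
});
console.log(JSON.stringify(example));
```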

3. The key problem that we are facing is:

- Run the smallest number of containers possible (exactly one for each unique combination of parameters)

- At the same time, be able to specify unique parameters for each test

- Somehow, be able to specify how many containers to launch, and with what parameters, "ahead of time". GitHub Actions do not allow us to create containers dynamically: they have to be hardcoded into the workflow file in the `matrix` parameter!

So, this means that each test case can use different parameters (for each phase). But we don’t want to spin up a new container for every test case; we want to do that only if some parameter is different.

Example:

- We have 5 test cases that use the same build & serve and docker image phases.

- The first 2 need the head-tags plugin to be active.

- The last 3 need the head-tags plugin to be inactive.

We don’t want to spin up 5 containers because we only need 2 of them.
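The deduplication in this example can be sketched as grouping test cases by their container parameters. This assumes each test case is described by a hypothetical `{ name, container }` object; one group corresponds to one container.

```javascript
// Hypothetical descriptors for the 5 test cases above: same WP version,
// only the head-tags plugin state differs.
const testCases = [
  { name: "test 1", container: { wpVersion: "5.4", plugins: { "head-tags": "active" } } },
  { name: "test 2", container: { wpVersion: "5.4", plugins: { "head-tags": "active" } } },
  { name: "test 3", container: { wpVersion: "5.4", plugins: { "head-tags": "inactive" } } },
  { name: "test 4", container: { wpVersion: "5.4", plugins: { "head-tags": "inactive" } } },
  { name: "test 5", container: { wpVersion: "5.4", plugins: { "head-tags": "inactive" } } },
];

// Group test-case names by their container parameters.
// Note: JSON.stringify is sensitive to key order, so this relies on every
// descriptor listing its keys in the same order.
function groupByContainer(cases) {
  const groups = new Map();
  for (const testCase of cases) {
    const key = JSON.stringify(testCase.container);
    if (!groups.has(key)) groups.set(key, []);
    groups.get(key).push(testCase.name);
  }
  return groups;
}

const containers = groupByContainer(testCases);
console.log(containers.size); // 2
```

A real implementation would need to normalize key order (or hash a canonical form) before comparing parameters.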

But how do we communicate to the workflow those requirements that are defined for an individual test case so that we can launch a minimal set of containers?

GitHub Actions require us to hardcode the “matrix” of possible container types in the workflow file… I’m going to explain further, hold on.

Proposal

For the “build and serve” phase:

First, I need to note that for the workflow to work, we need to add a flag to the build command so that it can use an output directory other than ./build. That way, when running npx frontity build with that flag, the files are built into another directory. This should be a trivial change; in fact, judging by the code of the build command, it has already been planned.

Analogously, we also need to add a flag to the serve command so that it looks for the build files in another directory. Likewise, a simple change.

Because we need different builds for different test cases, I think we have 2 options here:

option 1 - build a separate frontity app for each test case

This is a bit wasteful, but because all tests can run in parallel (more on that later), the total wall-clock time stays roughly constant (basically O(1)) regardless of the number of test cases. Each app can then simply be built and served without any extra steps. For any node unit tests this should be simple using the programmatic API of build and serve. However, in the Cypress tests I think we’ll have to use tasks (cy.task()), because Cypress test code runs in the browser and is not allowed to run server-side code directly.

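```javascript
import { randomBytes } from "crypto";
import { build, serve } from "@frontity/core"; // assumed exports and signatures

let hash; // this is going to be the build folder's name

beforeEach(async () => {
  hash = randomBytes(8).toString("hex"); // unique name, sth like `940ab8c9f23e4a11`
  await build({
    mode: "production",
    target: "module",
    publicPath: "/some/publicPath/",
    buildFolder: hash, // hypothetical option: the output-directory flag proposed above
  });
  await serve({ buildFolder: hash }); // hypothetical option: the serve flag proposed above
});
```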

option 2 - build all the different possible versions of the app ahead of time

For each possible combination of build & serve parameters, we can build the app, put each build in a unique build folder, and then serve each one on a separate port, like:

| target | publicPath | port |
| --- | --- | --- |
| default | default | 3000 |
| es5 | default | 3001 |
| default | /some/path | 3002 |

etc.

I think this mapping of parameters ==> port number can be fixed, and we can rely on a convention inside the tests to know which app we connect to. That is, port 3001 will always and only run the frontity app with the es5 target and the default publicPath (according to the table above).
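Such a fixed convention could live in a single lookup helper shared by all tests. A minimal sketch, assuming only the three rows of the table above exist; `PORTS` and `appUrl` are hypothetical names.

```javascript
// Fixed convention from the table above: build & serve parameters -> port.
const PORTS = [
  { target: "default", publicPath: "default", port: 3000 },
  { target: "es5", publicPath: "default", port: 3001 },
  { target: "default", publicPath: "/some/path", port: 3002 },
];

// Return the base URL of the frontity app built with the given parameters,
// or throw if no app is served for that combination.
function appUrl({ target = "default", publicPath = "default" } = {}) {
  const entry = PORTS.find(
    (row) => row.target === target && row.publicPath === publicPath
  );
  if (!entry) {
    throw new Error(`No app is served for target=${target}, publicPath=${publicPath}`);
  }
  return `http://localhost:${entry.port}`;
}

console.log(appUrl({ target: "es5" })); // http://localhost:3001
```

In a Cypress test this would allow `cy.visit(appUrl({ target: "es5" }) + "/?thing-on-click")` instead of a hardcoded port, so the convention is defined in one place.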

This way, let’s say that we have a cypress test like:

```javascript
it("should show the thing on click", () => {
  cy.visit("http://localhost:3001/?thing-on-click");
  cy.get("#thing").click();
  cy.get("#other-thing").should("exist");
});
```

The fact that we are accessing localhost on port 3001 tells us that we are accessing the frontity app with the es5 target and the default publicPath.

For the docker image and data/plugins phase:

I propose that we divide the e2e workflow into two separate jobs:

- pre-processing job

- test job

The pre-processing job will be responsible for literally “pre-processing” the test files in order to figure out the minimal set of containers to launch. I’m still a little bit fuzzy on all the details, but I believe this can be done. More details in a section further down below.

The “actual” test job will run the e2e tests, i.e. do everything that we expect from a test.

Remember when I mentioned this:

GitHub Actions require us to hardcode the “matrix” of possible container types in the workflow file… I’m going to explain further, hold on.

However, GitHub Actions allow passing information from one workflow job to another, including outputs that can be used as the matrix of another job. We can use this “outputs” feature to collect the information in the pre-processing job and create the matrix of containers for the test job. For example:

```yaml
### e2e-docker-wp-test.yml
name: e2e-docker-wp-test

on: [push, pull_request] # trigger is an assumption

jobs:
  pre-process:
    runs-on: ubuntu-latest
    outputs:
      matrix: ${{ steps.set-matrix.outputs.matrix }}
    steps:
      - uses: actions/checkout@v4
      - run: npm ci
      # The `pre-process` npm script should print the final matrix
      # as a single line of JSON to standard output.
      - id: set-matrix
        run: echo "matrix=$(npm run --silent pre-process)" >> "$GITHUB_OUTPUT"

  test:
    needs: pre-process
    runs-on: ubuntu-latest
    strategy:
      # This is the matrix of all the containers necessary for the test job
      matrix: ${{ fromJSON(needs.pre-process.outputs.matrix) }}
    steps:
      - uses: actions/checkout@v4
      - run: npm ci
      - run: npm run test
```

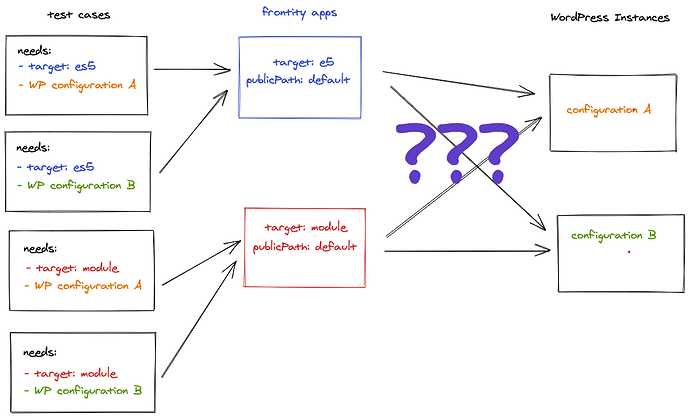

Connecting the WI to the frontity apps

Right now you might observe that we have built and served the frontity applications but do not yet have the WI. This poses a problem:

How do we connect the frontity apps to the WI? The frontity apps are built and served before we know what the parameters of the WI are. Specifically, we do not know which WI each frontity app should connect to for a specific test case! This can be summarised as:

I think I’ll need some input on how to make this work. My initial idea was that the server could expose some kind of API for changing the WordPress REST API URL, but I’m guessing that this is terrible from a security perspective. Perhaps the same could be accomplished with an environment variable on a per-request basis. I’m not entirely sure.

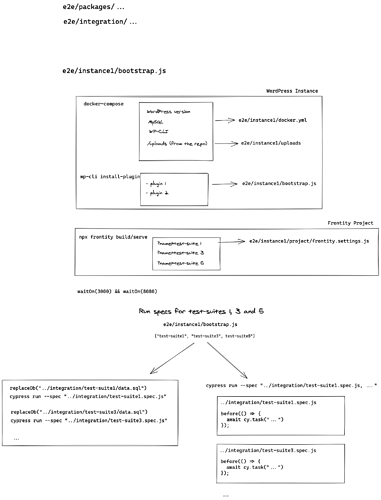

How is the actual pre-processing job going to work?

I’m not 100% sure about all the details, but let’s review the types of parameters that we will have to “pre-process” and in what format they come:

1. Fixed or latest version of WordPress

For this, we simply have to specify a version as a string, like "5.1" or "4.8".

2. Any configuration (database)

The database configuration can be stored in a uniquely named folder. This folder would store the SQL file with the database config. So, what we would need here is a path to <folder>/data.sql

3. Fixed or latest version of any WP plugin (+ any configuration of that plugin)

Same as 2. This will be defined in the data.sql database dump.

4. Any custom WP configuration that can be done through the WP CLI

This configuration is a string that is basically a bash command (or a list of commands)

5. Any media uploaded

Same as 2. This is a path to a folder that contains all the media.

6. Custom post contents (with embeds or any kind of weird content)

Same as 2.
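Since parameters 2 to 6 all end up as WP CLI work inside the container, here is a minimal sketch of turning them into shell commands. The parameter object shape and `toWpCliCommands` are hypothetical; `wp db import` and `wp media import` are existing WP-CLI commands. Parameter 1 (the WordPress version) is not handled here because it selects the Docker image instead.

```javascript
// Hypothetical data/plugins parameters of one test case (paths are illustrative).
const dataPlugins = {
  dbDump: "fixtures/head-tags-active/data.sql",
  mediaFolder: "fixtures/head-tags-active/media",
  wpCli: ["wp option update blogname 'E2E test'"],
};

// Turn the parameters into the shell commands to run inside the container.
// Paths are interpolated unquoted, so this assumes they contain no spaces.
function toWpCliCommands({ dbDump, mediaFolder, wpCli = [] }) {
  const commands = [];
  if (dbDump) commands.push(`wp db import ${dbDump}`); // parameters 2, 3 and 6
  if (mediaFolder) commands.push(`wp media import ${mediaFolder}/*`); // parameter 5
  return commands.concat(wpCli); // parameter 4: raw WP CLI commands
}

console.log(toWpCliCommands(dataPlugins).join("\n"));
```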

The main thing to observe is that each of parameters 1-6 is “hashable”. This means that we can compute a hash of the combination of all parameters for each test case and launch one container for each unique hash.
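A minimal sketch of such a hash, assuming the parameters of a test case are a plain JSON-like object. Keys are sorted before hashing so that the same parameters written in a different order produce the same container. `stableStringify` and `containerHash` are hypothetical names; `crypto.createHash` is Node's built-in API.

```javascript
const crypto = require("crypto");

// Serialize with sorted object keys so that {a, b} and {b, a} serialize the same.
function stableStringify(value) {
  if (Array.isArray(value)) return `[${value.map(stableStringify).join(",")}]`;
  if (value && typeof value === "object") {
    const keys = Object.keys(value).sort();
    return `{${keys.map((k) => `${JSON.stringify(k)}:${stableStringify(value[k])}`).join(",")}}`;
  }
  return JSON.stringify(value);
}

// One short hash per unique combination of container parameters.
function containerHash(params) {
  return crypto
    .createHash("sha256")
    .update(stableStringify(params))
    .digest("hex")
    .slice(0, 12);
}

const a = containerHash({ wpVersion: "5.1", dbDump: "fixtures/a/data.sql" });
const b = containerHash({ dbDump: "fixtures/a/data.sql", wpVersion: "5.1" });
console.log(a === b); // true
```

Note that this hashes the paths, not the files behind them: if a data.sql changes but keeps its path, the hash stays the same. Hashing the file contents instead would avoid that, at the cost of reading every fixture.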

I’m not sure what the best way to “run” the pre-processing is, but my best idea was to use a Babel plugin.

This way, we could put the configuration inside the test case as a “magic comment” and hash the contents of that magic comment.
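To illustrate the magic-comment idea, here is a minimal sketch that swaps in a regular expression instead of a Babel plugin. The comment format (`// e2e: {...json...}` on the line right before a test() or it() call) and `extractTestParams` are hypothetical.

```javascript
// Hypothetical magic-comment format: `// e2e: {...json...}` on the line right
// before a test() / it() call. Captures the JSON and the test name.
const MAGIC = /\/\/\s*e2e:\s*(\{.*\})\s*\n\s*(?:test|it)\(\s*(["'`])(.*?)\2/g;

// Return one { name, params } entry per annotated test case.
function extractTestParams(source) {
  const result = [];
  for (const match of source.matchAll(MAGIC)) {
    result.push({ name: match[3], params: JSON.parse(match[1]) });
  }
  return result;
}

const source = `
// e2e: {"wpVersion": "5.1", "dbDump": "fixtures/head-tags-active/data.sql"}
it("should render the head tags", () => {});

// e2e: {"wpVersion": "5.1"}
test('works without plugins', () => {});
`;

console.log(extractTestParams(source));
```

A Babel plugin would be more robust than this regex: Babel's parser attaches comments to AST nodes, so the plugin could also see the surrounding describe() blocks and would not break on unusual formatting.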

Actually running the tests

Assuming that we have now launched the frontity apps and the WIs, we have to run all and only the tests that should be run for that particular frontity app and WI.

I think that this can be accomplished with the same mechanism that I mentioned earlier that creates the "matrix" of containers. The npm run pre-process script can return the names of the test cases that could then be passed as parameters to jest or cypress inside of the workflow file.
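A minimal sketch of what the pre-process script could print: one matrix entry per unique container hash, each carrying the list of test names and a pattern for Jest's `--testNamePattern`. The input shape, the `buildMatrix` helper, and the matrix field names are hypothetical.

```javascript
// Hypothetical input: each test case already tagged with the hash of its
// container parameters.
const testCases = [
  { name: "renders head tags", hash: "a1b2c3", params: { wpVersion: "5.1" } },
  { name: "renders title (1)", hash: "a1b2c3", params: { wpVersion: "5.1" } },
  { name: "works on 4.8", hash: "d4e5f6", params: { wpVersion: "4.8" } },
];

// Escape regex metacharacters so a test name can be used inside a pattern.
const escapeRegExp = (s) => s.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");

// One matrix entry per unique hash, listing the tests that container must run.
function buildMatrix(cases) {
  const byHash = new Map();
  for (const { name, hash, params } of cases) {
    if (!byHash.has(hash)) byHash.set(hash, { hash, params, tests: [] });
    byHash.get(hash).tests.push(name);
  }
  const include = [...byHash.values()].map((entry) => ({
    ...entry,
    testNamePattern: entry.tests.map(escapeRegExp).join("|"),
  }));
  return { include };
}

// The pre-process script prints this single line of JSON to stdout.
console.log(JSON.stringify(buildMatrix(testCases)));
```

Each test job could then run something like `npx jest -t "${{ matrix.testNamePattern }}"`; Jest matches `-t` against the full test name, including the names of surrounding describe() blocks, so the pattern is left unanchored. For Cypress, `--spec` selects files rather than individual tests. Also note that GitHub limits a matrix to 256 jobs per workflow run.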

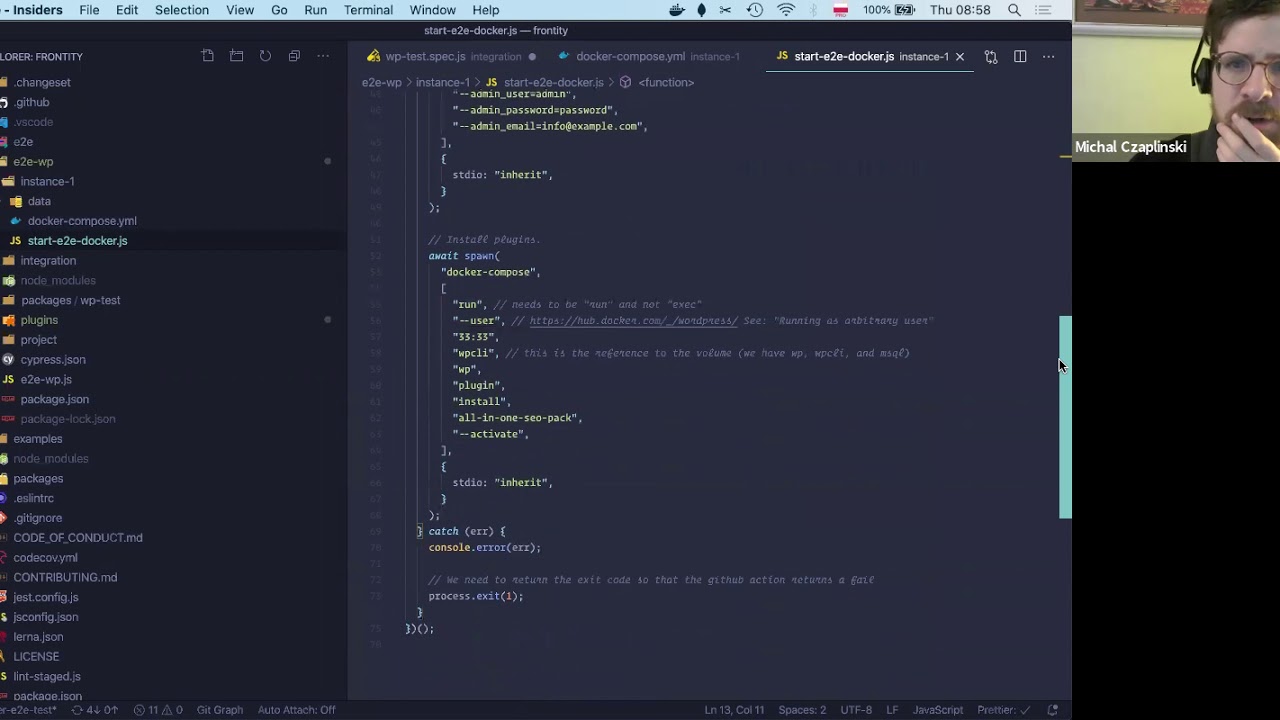

Using built-in GitHub services instead of custom ones

We should make use of the built-in GitHub service containers instead of running docker-compose ourselves. This should let us avoid some of the overhead of launching containers ourselves.

This is up for discussion still. Is this sufficient…?

Side note about scripts

Note that each

I think for 1. and 2.: in the PR the files and folders are a bit of a mess right now, but that was my idea as well. Same for adding extra scripts to ease local development. I think you explained it a bit better with the drawing.

I’m trying that approach now.