This is a rambling, experimental post, and I should warn you that I have little to no experience with Frontity. These ideas may have been explored before, so apologies for the duplication if so.

As it happens, I was recently working on a prototype that essentially re-implements the WordPress theming system in React: a highly coupled / embedded approach to writing WordPress React sites. I’ve pushed some non-working (well, works-for-me-only) code to https://github.com/joehoyle/r/ and /joehoyle/r-example-theme if anyone is over-curious. While I was doing that, Ryan McCue suggested I check out Frontity to see if our efforts could be combined.

My main goal was essentially to have a WordPress theming framework that lets you create Header.tsx just as you would header.php. R supports SSR-only, client-only, or hydrated rendering. It maps WordPress rewrites to react-router automatically and implements the WP template hierarchy. A (maybe) novel aspect of the approach is running the V8 engine embedded in PHP via the v8js PHP extension, which means there’s no need for a Node server for SSR, and you can cross-call between JS and PHP. The main advantage of that is that REST API requests made during SSR can be routed locally (via rest_do_request()) instead of going over the network.
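
To make the template-hierarchy idea a bit more concrete, here is a minimal TypeScript sketch that builds WordPress’s candidate template list for a single post or a page (most specific first) and picks the first one a theme provides. The `QueryContext` shape and the idea of keying theme components by template name are assumptions for illustration, not R’s actual API, and only the single/page branches are covered (no custom page templates, archives, etc.).

```typescript
// Hypothetical shape of the query context PHP would hand to the renderer.
interface QueryContext {
  type: "single" | "page";
  postType: string; // e.g. "post" or a custom post type
  slug: string;
  id: number;
}

// Candidate template names, most specific first, following the single and
// page branches of the WordPress template hierarchy.
function templateCandidates(ctx: QueryContext): string[] {
  const candidates: string[] =
    ctx.type === "page"
      ? [`page-${ctx.slug}`, `page-${ctx.id}`, "page"]
      : [`single-${ctx.postType}-${ctx.slug}`, `single-${ctx.postType}`, "single"];
  // Both branches fall back to singular, then index.
  return [...candidates, "singular", "index"];
}

// Return the first template the theme provides, as WordPress does with PHP files.
function resolveTemplate<T>(ctx: QueryContext, templates: Record<string, T>): T {
  for (const name of templateCandidates(ctx)) {
    if (Object.prototype.hasOwnProperty.call(templates, name)) {
      return templates[name];
    }
  }
  throw new Error("A theme must provide an index template");
}

// Usage: a theme with only Single and Index components (strings stand in for components).
const theme = { single: "Single", index: "Index" };
console.log(resolveTemplate({ type: "single", postType: "post", slug: "hello-world", id: 1 }, theme)); // → Single
console.log(resolveTemplate({ type: "page", postType: "page", slug: "about", id: 2 }, theme)); // → Index
```

In a real theme the map values would be React components (e.g. whatever Single.tsx exports) rather than strings.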

So, long story short, I essentially tried to go down the same route with Frontity: implement a Frontity server in PHP/V8 that can provide SSR with your existing WP server alone, giving tighter coupling / integration (shock!) between the WordPress site and Frontity. Probably a major aside: I personally don’t find decoupled architectures very attractive; running two services (WP + Node), with their own caches, deploy workflows, etc., isn’t that appealing to me. I still want to build WordPress sites, but in React, with JS as first-class, not “progressively enhanced”.

How did it go? Well, I spent a few hours building a new server that uses V8 in PHP. I guess it’s not really a server per se, more a simple script that renders the page for a given context/URL and returns it to PHP (with all the fetch shimming needed to service REST requests internally).
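
A minimal sketch of what that fetch shimming could look like, assuming the PHP side exposes a synchronous bridge function that wraps `rest_do_request()` and returns a status plus a JSON body. The `RestBridge` signature, the `BridgeResponse` shape and the `/wp-json` prefix check are all assumptions for illustration. REST URLs are served in-process; anything else falls through to a real fetch.

```typescript
// Hypothetical result shape returned by the PHP side of the bridge.
interface BridgeResponse {
  status: number;
  body: string; // JSON-encoded response data
}

// Hypothetical synchronous bridge function exposed from PHP, e.g. a v8js
// callback that builds a WP_REST_Request and passes it to rest_do_request().
type RestBridge = (method: string, route: string, query: Record<string, string>) => BridgeResponse;

// The small subset of the fetch Response interface the theme code relies on.
interface MinimalResponse {
  ok: boolean;
  status: number;
  json(): Promise<unknown>;
  text(): Promise<string>;
}

type FetchLike = (url: string, init?: { method?: string }) => Promise<MinimalResponse>;

const REST_PREFIX = "/wp-json";

// Parse "a=1&b=2" into an object without relying on URLSearchParams.
function parseQuery(qs: string): Record<string, string> {
  const decode = (s: string) => decodeURIComponent(s.replace(/\+/g, " "));
  const out: Record<string, string> = {};
  for (const pair of qs.split("&")) {
    if (!pair) continue;
    const eq = pair.indexOf("=");
    out[decode(eq === -1 ? pair : pair.slice(0, eq))] = eq === -1 ? "" : decode(pair.slice(eq + 1));
  }
  return out;
}

// Wrap a bridge so REST URLs are served in-process; everything else uses `fallback`.
function createFetchShim(bridge: RestBridge, fallback: FetchLike): FetchLike {
  return async (url, init) => {
    // Drop scheme and host so absolute and relative URLs are handled alike.
    const pathAndQuery = url.replace(/^https?:\/\/[^/]+/, "");
    const q = pathAndQuery.indexOf("?");
    const path = q === -1 ? pathAndQuery : pathAndQuery.slice(0, q);
    const qs = q === -1 ? "" : pathAndQuery.slice(q + 1);
    if (path !== REST_PREFIX && !path.startsWith(REST_PREFIX + "/")) {
      return fallback(url, init);
    }
    // "/wp-json/wp/v2/posts" -> "/wp/v2/posts", the route form rest_do_request() expects.
    const route = path.slice(REST_PREFIX.length) || "/";
    const res = bridge(init?.method ?? "GET", route, parseQuery(qs));
    return {
      ok: res.status >= 200 && res.status < 300,
      status: res.status,
      json: async () => JSON.parse(res.body),
      text: async () => res.body,
    };
  };
}
```

Because the bridge is synchronous, the only asynchrony left is the Promise wrapper that fetch-based code expects.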

I got to the point where I could build a bundle (using esbuild, more on that in a bit) that executes in V8, which is no small feat, as anyone who has had to work in a non-Node, non-browser JS context will know. Essentially this means building for the browser context, but polyfilling missing APIs like URL, fetch, etc.
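
One way to keep the polyfilling honest is a small guard that only installs a global when the host (V8 via v8js here) doesn’t already provide it, so the same bundle keeps using native implementations in a real browser. A minimal TypeScript sketch; the polyfill implementations themselves are assumed to come from elsewhere.

```typescript
type GlobalScope = Record<string, unknown>;

// Install each polyfill only when the host does not already provide that global,
// so the same bundle keeps using native implementations in a real browser.
// Returns the names that were installed, which is handy for debugging the V8 host.
function installMissing(scope: GlobalScope, polyfills: GlobalScope): string[] {
  const installed: string[] = [];
  for (const name of Object.keys(polyfills)) {
    if (typeof scope[name] === "undefined") {
      scope[name] = polyfills[name];
      installed.push(name);
    }
  }
  return installed;
}
```

In the bundle entry you would call it on the global object, e.g. `installMissing(globalThis as unknown as Record<string, unknown>, { URL: UrlPolyfill, fetch: fetchShim })`, where `UrlPolyfill` and `fetchShim` are hypothetical names for whichever implementations you bundle.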

It was all going swimmingly until I hit a snag with the components that use loadable. Again, I don’t know much/anything about loadable (there’s a theme here), but from what I gather it’s hard-tied to Babel and there’s some trickery needed to load loadable components synchronously. I wasn’t able to get any further yet – I have SSR working for the header / menu parts of the mars theme. The pre-population of data over the v8/php bridge is working too. I’m currently using renderToStaticMarkup as hydration requires a lot more complexity in grabbing the loadable chunks etc.

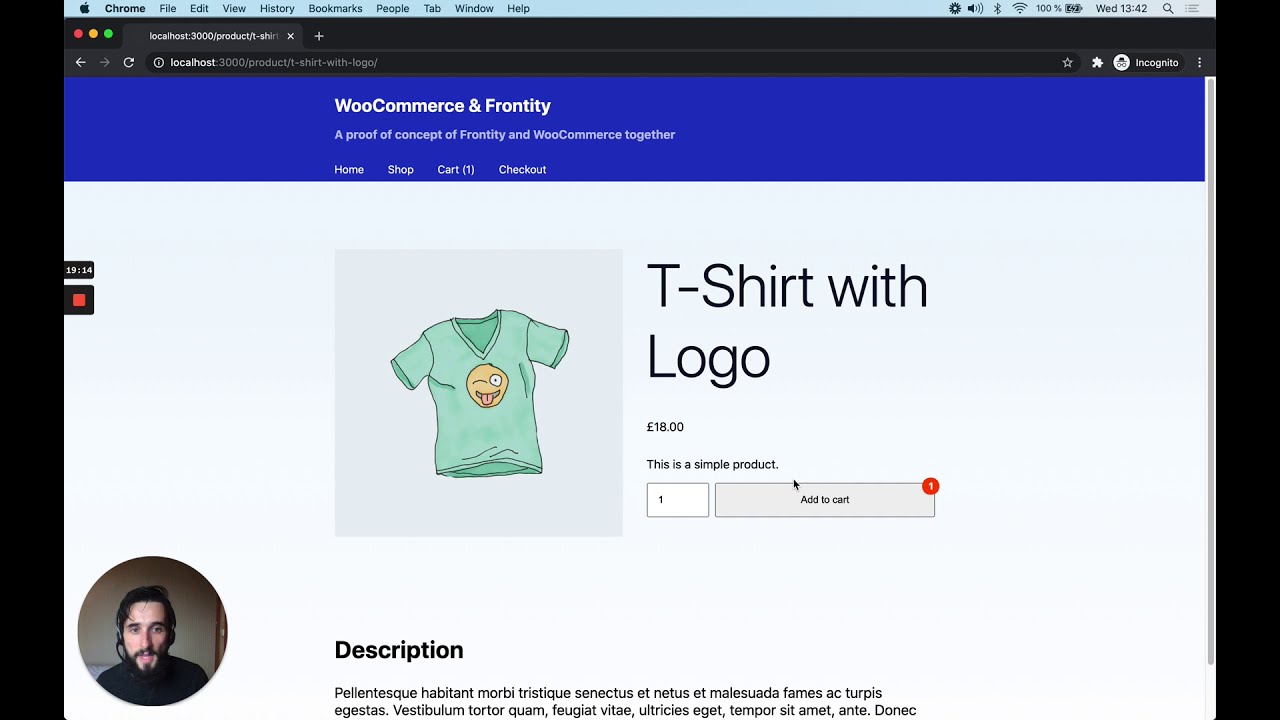

Here’s where I got to (no client rendering, server-rendered in php-v8js)

At the moment I’m using esbuild to bundle Frontity and all its deps (side note: has anyone used https://esbuild.github.io/? It is AMAZING! Unbelievably fast, with a simple but powerful plugin API). However, there does appear to be a blocker: loadable requires Babel, so it looks like I might need to switch over to that and downgrade from 120ms total build times to more like 2 minutes with webpack+Babel!
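
On the esbuild plugin API: one hypothetical way to experiment around the Babel dependency is a plugin that redirects an import of a package to a local shim module. The sketch follows the shape of esbuild’s plugin interface (`name`, `setup(build)`, `build.onResolve` with a `filter` RegExp), but declares only the minimal types it touches so it stands alone. The shim path, and the idea of shimming `@loadable/component` at all, are assumptions rather than something shown to work.

```typescript
// Minimal structural subset of esbuild's plugin types, declared here so the
// sketch stands alone; the real types live in the esbuild package.
interface OnResolveArgs {
  path: string;
  importer: string;
}
interface OnResolveResult {
  path: string;
}
interface PluginBuild {
  onResolve(
    options: { filter: RegExp },
    callback: (args: OnResolveArgs) => OnResolveResult | undefined,
  ): void;
}
interface Plugin {
  name: string;
  setup(build: PluginBuild): void;
}

// Escape a package name so it can be matched literally inside a RegExp.
function escapeRegExp(s: string): string {
  return s.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
}

// Hypothetical alias plugin: every import of exactly `pkg` resolves to `shimPath`.
// esbuild expects `path` to be absolute when the default "file" namespace is used.
function aliasPlugin(pkg: string, shimPath: string): Plugin {
  const filter = new RegExp(`^${escapeRegExp(pkg)}$`);
  return {
    name: `alias:${pkg}`,
    setup(build) {
      build.onResolve({ filter }, () => ({ path: shimPath }));
    },
  };
}
```

With esbuild installed, the plugin would go in the `plugins` array passed to `build()`. Whether a shim can actually stand in for loadable’s Babel-dependent behaviour is an open question this sketch doesn’t settle.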

I do wonder if I’m doing something wrong with loadable: when using renderToStaticMarkup, I think it’s meant to resolve the modules (which are in my bundle) synchronously, but alas, I haven’t worked that out yet.
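
To illustrate the “sync resolve” problem: renderToStaticMarkup is synchronous, so a component whose code is only reachable through a Promise (as with a dynamic `import()`, even when the module is already inside the bundle) can’t be rendered in that pass. Below is a hypothetical, framework-free loader wrapper, not @loadable/component’s API: it keeps an async loader for the browser plus an optional synchronous one (e.g. a static import) that a server render can read without awaiting.

```typescript
// Hypothetical loader spec, not @loadable/component's API.
interface LoaderSpec<T> {
  load: () => Promise<T>; // code-split path, e.g. () => import("./Post")
  loadSync?: () => T; // the same module reached without a Promise, e.g. via a static import
}

interface SyncAwareLoader<T> {
  readSync(): T | undefined;
  load(): Promise<T>;
}

// `undefined` doubles as "not loaded yet", so modules that resolve to undefined are not supported.
function createSyncAwareLoader<T>(spec: LoaderSpec<T>): SyncAwareLoader<T> {
  let cached: T | undefined;
  return {
    // Safe inside a synchronous render: never awaits, returns undefined if unavailable.
    readSync() {
      if (cached === undefined && spec.loadSync) {
        cached = spec.loadSync();
      }
      return cached;
    },
    // Client path: loads once, then later readSync() calls hit the cache.
    async load() {
      if (cached !== undefined) return cached;
      const mod = await spec.load();
      cached = mod;
      return mod;
    },
  };
}
```

During SSR you would call `readSync()` and render a placeholder if it returns undefined; on the client, awaiting `load()` before hydrating would let both sides see the same module.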

So, that’s where I got to. I also just discovered this community forum, so I thought it was probably time to ask whether this has all been tried before, or whether it’s at all an interesting direction to pursue. I did stumble on frontity/frontity-embedded-proof-of-concept, which looks like it might have similar goals of integrating Frontity more tightly with the WP site. That’s roughly what I’m after too, though ultimately I’m aiming for much tighter integration, with SSR done via PHP as well.

I haven’t pushed any of this code yet. It basically ended up being a fork of R, but instead of rolling my own React layer, it attempts to swap in Frontity to do what it does best. The code is in even worse shape than R (and that’s saying something!)

Happy holiday hacking!

I dream of a new, supported extension that embeds V8 with support for things like the V8 debugger protocol and ESM. Again, I think you have absolutely made the correct decision by not pursuing this as Frontity. Personally I am in “prototype the future” mode, where user adoption, and really any real-world practicalities, don’t apply so much!
